| Featured | Project Banner | Title | Body | Project Impact | Client Quote (Optional) | Publications | Patents (Optional) | Brochures and Specs (Optional) | Project Media | Newsroom | Offerings | Project roadmap title | Project roadmap Desc | Project Roadmap | Acknowledgment/disclaimer (for govn’t and 3rd party projects) | PI/PL | Team Members | Primary Technical Capability | Technology Domain | Sector | Client | Research Facility | eManuscript Review | Sponsor | Collaborators | YouTube Link |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| On |

|

Entropy Economy | AI is driving innovation at lightning speed, but our focus on faster and easier must not overshadow our opportunities to lessen carbon production while optimizing for energy-efficient learning.

|

Energy-aware machine learning (EAML): Key to executing the Entropy Economy is the development of EAML algorithms that enable tradeoffs between HPC throughput, energy consumed, and output quality. Future work will produce EAML algorithms capable of balancing energy loads, learning from wasted or needless entropy flows, and adjusting to create ideal energy profiles. “We talk about ‘using’ energy, but doesn’t one of the laws of nature say that energy can’t be created or destroyed? … When we ‘use up’ one kilojoule of energy, what we’re really doing is taking one kilojoule of energy in a form that has low entropy (for example, electricity), and converting it into an exactly equal amount of energy in another form, usually one that has much higher entropy (for example, hot air or hot water). When we’ve ‘used’ the energy, it’s still there; but we normally can’t ‘use’ the energy over and over again, because only low entropy energy is ‘useful’ to us... It’s a convenient but sloppy shorthand to talk about the energy rather than the entropy…”
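The tradeoff among throughput, energy, and output quality described above can be made concrete with a small selection routine. The sketch below is not GE's EAML algorithm: it assumes a set of hypothetical training configurations whose metrics have already been measured, keeps only the Pareto-optimal ones (no other configuration is at least as good on every metric), and then picks one using user-chosen weights.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class RunConfig:
    name: str
    throughput: float  # samples/s (higher is better)
    energy_kwh: float  # energy per training run (lower is better)
    quality: float     # e.g. validation accuracy (higher is better)

def dominates(a: RunConfig, b: RunConfig) -> bool:
    """a is no worse than b on every metric and strictly better on at least one."""
    no_worse = (a.throughput >= b.throughput and a.energy_kwh <= b.energy_kwh
                and a.quality >= b.quality)
    better = (a.throughput > b.throughput or a.energy_kwh < b.energy_kwh
              or a.quality > b.quality)
    return no_worse and better

def pareto_front(configs):
    return [c for c in configs if not any(dominates(o, c) for o in configs if o is not c)]

def pick(configs, w_throughput, w_energy, w_quality):
    """Weighted choice among Pareto-optimal configs, each metric min-max normalized."""
    front = pareto_front(configs)

    def norm(values, v):
        lo, hi = min(values), max(values)
        return 0.0 if hi == lo else (v - lo) / (hi - lo)

    tp = [c.throughput for c in front]
    en = [c.energy_kwh for c in front]
    q = [c.quality for c in front]

    def score(c):
        return (w_throughput * norm(tp, c.throughput)
                - w_energy * norm(en, c.energy_kwh)
                + w_quality * norm(q, c.quality))

    return max(front, key=score)

# Hypothetical measurements for four ways of running the same training job.
configs = [
    RunConfig("full-power", 1000, 50, 0.92),
    RunConfig("capped-gpu", 800, 35, 0.92),
    RunConfig("small-batch", 600, 40, 0.91),  # dominated by capped-gpu
    RunConfig("low-precision", 900, 30, 0.90),
]
print([c.name for c in pareto_front(configs)])
print(pick(configs, 0, 1, 1).name)  # energy- and quality-focused weights
```

Shifting the weights moves the choice along the front: weighting energy and quality selects the power-capped run, while weighting throughput and energy favors the lower-precision run.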

Brochures, Papers & Presentations: Brochure: A New Paradigm for Carbon Reduction and Energy Efficiency for the Age of AI; Paper: The Entropy Economy: A New Paradigm for Carbon Reduction and Energy Efficiency for the Age of AI; Paper: Using ML Training Computations for Grid Stability in 2050; Paper: Optimizing Emissions for Machine Learning Training; Paper: Deep Time Series Sketching and Its Application on Industrial Time Series Clustering |

Project roadmap | |

Scott Evans | Tapan Shah, Hao Huang, Jeff Maskalunas, Achalesh Pandey | Artificial Intelligence | Artificial Intelligence | Power & Energy | Government Agencies, Strategic Partners | Off | |||||||||||||

| On |

|

5G Warehouse | GE Research and the US Department of Defense Partner on 5G Initiative to Create Smart Warehouses; read the exciting details of the project in this press release.

|

Project roadmap | |

These efforts have been sponsored by the U.S. Government under Other Transaction number W15QKN-15-9-1004 between the NSC, and the Government. The US Government is authorized to reproduce and distribute reprints for Governmental purposes notwithstanding any copyright notation thereon. The views and conclusions contained herein are those of the authors and should not be interpreted as necessarily representing the official policies or endorsements, either expressed or implied, of the U.S. Government. | SM Hasan | 5G | Edge Computing, Embedded Computing, 5G, Controls & Optimization, Enterprise Optimization, Estimation & Modeling | Aerospace, Power & Energy, Healthcare, Advanced Manufacturing, Transportation, Defense & Security, Custom Solutions | GE Businesses, Government Agencies | R&D Facilities, Niskayuna | On | |||||||||||||

| On |

|

Distributed Real-time State Estimation for Power Distribution Grid | The distribution grid delivers electric power to end customers: voltages from the transmission grid are reduced and regulated through a series of transformers to customer-usable levels. Compared with transmission networks, today’s distribution grids are sparsely measured and much larger and more complex, often approaching tens of millions of electrical nodes. Traditionally, the current state of a distribution network has been assessed with power-flow-based approaches that combine limited measurements and historical load profiles with heuristics. As the growing deployment of distributed energy resources (DERs) causes significant variation in power flow, such approaches cannot provide robust and accurate estimates. This directly affects real-time situational awareness and the subsequent control and optimization that rely on an accurate estimate of the current system state. The team is developing and testing more advanced methods based on dynamic state estimation that employ non-linear Kalman filtering to provide robustness to measurement errors and to exploit time-series data from limited measurements. While centralized estimation approaches are very mature and used across many industrial assets for online monitoring and control, a fundamental challenge in applying state estimation to electrical distribution networks is the sheer size of the problem. The team has developed a novel distributed estimation solution that is practical and scalable and can achieve the same general level of accuracy as a centralized estimator.
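To illustrate the non-linear Kalman filtering mentioned above, here is a minimal extended Kalman filter (EKF) for a hypothetical two-bus example: one load bus connected through a lossless line of assumed reactance to a substation bus held at 1.0 per unit and angle zero. The state is the load-bus voltage magnitude and angle, modeled as a random walk; the measurements are the voltage magnitude and the active power sent toward the substation. This is a toy under those assumptions, not the team's estimator; a real feeder has thousands of nodes, three phases, and many more measurement types.

```python
import numpy as np

X_LINE = 0.1  # hypothetical line reactance, per unit
V0 = 1.0      # substation bus voltage magnitude (angle taken as 0)

def h(x):
    """Measurements: load-bus voltage magnitude and active power sent toward the substation."""
    v, theta = x
    return np.array([v, v * V0 * np.sin(theta) / X_LINE])

def jacobian_h(x):
    v, theta = x
    return np.array([[1.0, 0.0],
                     [V0 * np.sin(theta) / X_LINE, v * V0 * np.cos(theta) / X_LINE]])

def ekf_step(x, P, z, Q, R):
    # Predict with a random-walk model (F = I): state unchanged, uncertainty grows.
    P = P + Q
    # Update: linearize h at the predicted state and apply the Kalman gain.
    H = jacobian_h(x)
    S = H @ P @ H.T + R
    K = P @ H.T @ np.linalg.inv(S)
    x = x + K @ (z - h(x))
    P = (np.eye(len(x)) - K @ H) @ P
    return x, P

x_true = np.array([0.98, -0.05])  # hypothetical true state (p.u., rad)
x, P = np.array([1.0, 0.0]), np.diag([1e-2, 1e-2])
Q, R = np.diag([1e-6, 1e-6]), np.diag([1e-6, 1e-6])
rng = np.random.default_rng(0)
for _ in range(50):
    z = h(x_true) + rng.normal(0.0, 1e-3, size=2)  # noisy measurements
    x, P = ekf_step(x, P, z, Q, R)
print(x)
```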
The technology development leverages a mature, state-of-the-art, in-house estimation library implemented in MATLAB®/Simulink® for rapid prototyping and testing, together with automated tools that produce validated, portable software deployable on a target platform as containerized microservices.
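How the distributed estimator combines the results of its sub-estimators is not described here. As one commonly used building block, offered as an assumption rather than the team's method, two areas that each estimate a shared boundary quantity can merge their Gaussian estimates in information form, provided their errors are independent. The numbers are hypothetical.

```python
import numpy as np

def fuse(estimates):
    """Fuse independent Gaussian estimates [(x_i, P_i), ...] of the same state.

    Information form: P^-1 = sum(P_i^-1), x = P @ sum(P_i^-1 @ x_i).
    Assumes uncorrelated errors; correlated estimates need e.g. covariance intersection.
    """
    infos = [np.linalg.inv(Pi) for _, Pi in estimates]
    P = np.linalg.inv(sum(infos))
    x = P @ sum(Ii @ xi for Ii, (xi, _) in zip(infos, estimates))
    return x, P

area_a = (np.array([1.00]), np.array([[4e-4]]))  # hypothetical boundary-voltage estimate, p.u.
area_b = (np.array([0.98]), np.array([[1e-4]]))
x, P = fuse([area_a, area_b])
print(x, P)
```

The fused value leans toward the more certain area (smaller variance), and the fused variance is smaller than either input.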

|

The distributed estimation solution has been successfully demonstrated at scale on large distribution network models such as the IEEE 8500-node benchmark and is being further matured for field deployment and testing. Once commercialized, it will provide distribution operators with highly scalable, robust, and accurate real-time state estimation and a foundation for enhanced model-based control and optimization. |

Project roadmap | |

Controls & Optimization | Controls & Optimization, Estimation & Modeling | Power & Energy, Transportation, Defense & Security | GE Businesses, Government Agencies | On | |||||||||||||||

| On |

|

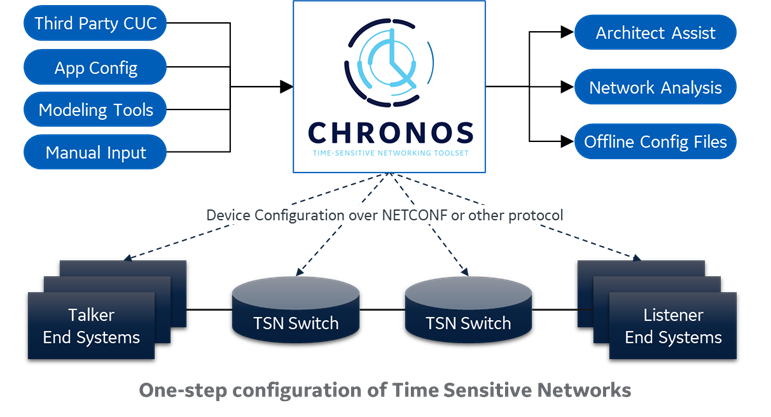

Time-Sensitive Networking (TSN) | Time-Sensitive Networking (TSN) is an evolving technology that enables standard (commercial) Ethernet to be used in industrial applications by adding the reliability and determinism that commercial Ethernet previously lacked. The well-known benefits of Ethernet (interoperability, scalability, evolvability, and ubiquity), combined with industrial-grade performance, enable a cost-efficient communication solution in aerospace, energy, automotive, and many other industries. Ethernet is defined by the IEEE 802.3 and 802.1 standards. TSN comprises a set of amendments to the IEEE 802.1Q standard that address time synchronization, traffic policing and shaping, path control, frame replication and elimination, configuration, and management. Additionally, standards bodies are developing TSN profiles to enable cost-efficient adoption of TSN in specific verticals. GE is leading the development of the TSN profile for Aerospace Ethernet, a joint project between IEEE and SAE. GE Research, in close collaboration with business units, is working on deploying TSN-based solutions in the transportation, energy, and aerospace sectors. In particular, the research team is focused on scheduling, configuration, analysis, and security of TSN in real-world use cases. One outcome of this research is Chronos, a software tool that allows one-step configuration and analysis of complex time-sensitive networks, as shown below. The purpose of the Chronos toolset is to simplify TSN network deployment from start to finish. Chronos allows users to design and analyze IEEE 802.1 TSN architectures, auto-schedule mixed-criticality traffic including best-effort and deterministic streams, generate network configurations for end systems and switches, and statically or dynamically load those configurations into network devices.
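Chronos' scheduling algorithm is not described here. As a rough illustration of what auto-scheduling deterministic traffic involves, the sketch below assigns transmit offsets to periodic streams on a single egress port so that no two frames overlap within the hyperperiod, then derives an IEEE 802.1Qbv-style gate control list that alternates between scheduled-traffic and best-effort windows. Stream names, periods, and the 1 µs slot granularity are hypothetical; a real scheduler also handles multi-hop paths, guard bands, propagation delay, and time-synchronization error.

```python
from dataclasses import dataclass
from math import lcm

@dataclass
class Stream:
    name: str
    period_us: int   # stream period, microseconds
    tx_time_us: int  # time to transmit one frame on the link, microseconds

def schedule(streams):
    """Greedy, shortest-period-first offset assignment on one egress port."""
    cycle = lcm(*(s.period_us for s in streams))  # hyperperiod
    busy = [False] * cycle                         # 1 us slots
    offsets = {}
    for s in sorted(streams, key=lambda st: st.period_us):
        for off in range(s.period_us - s.tx_time_us + 1):
            slots = [k * s.period_us + off + i
                     for k in range(cycle // s.period_us)
                     for i in range(s.tx_time_us)]
            if not any(busy[t] for t in slots):
                for t in slots:
                    busy[t] = True
                offsets[s.name] = off
                break
        else:
            raise ValueError(f"no conflict-free offset for stream {s.name}")
    return cycle, offsets, busy

def gate_control_list(busy):
    """Merge slots into (gate, duration_us) entries: 'ST' = scheduled, 'BE' = best effort."""
    gcl, start = [], 0
    for t in range(1, len(busy) + 1):
        if t == len(busy) or busy[t] != busy[start]:
            gcl.append(("ST" if busy[start] else "BE", t - start))
            start = t
    return gcl

cycle, offsets, busy = schedule([Stream("A", 100, 10), Stream("B", 200, 20)])
print(cycle, offsets)
print(gate_control_list(busy))
```

Greedy placement is only a simple heuristic; it can fail to find a schedule that exists, which is one reason schedulers for larger networks often use constraint solvers instead.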
Another area of development is secure time synchronization: the team has added symmetric key authentication, based on IEEE 1588-2008 Annex K, to the generalized Precision Time Protocol (gPTP). With this method, participants in the time distribution tree authenticate all protocol messages using symmetric keys. Messages from participants with an invalid key are suppressed, which removes those participants from the time distribution tree. The solution was implemented in the open-source linuxptp package, and GE Research’s secure version of linuxptp is available on GitHub (project: adding Annex K to linuxptp). The secure PTP work was supported by the Department of Energy under Award Number DE-OE0000894. Read more about the DOE project.
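The authentication idea can be sketched with Python's standard `hmac` module. This is not the linuxptp patch or the exact Annex K message format; Annex K also covers message layout, key identification, and replay protection, none of which are modeled here. The sketch only shows how a shared symmetric key lets a receiver compute a truncated HMAC over each message and drop any message whose tag does not match. The key values and the 16-byte tag length are assumptions.

```python
import hashlib
import hmac

TAG_LEN = 16  # assumed tag length: HMAC-SHA256 truncated to 128 bits

def sign(key: bytes, msg: bytes) -> bytes:
    """Append a truncated HMAC-SHA256 tag to a protocol message."""
    return msg + hmac.new(key, msg, hashlib.sha256).digest()[:TAG_LEN]

def verify(key: bytes, packet: bytes):
    """Return the message if its tag is valid, otherwise None (message suppressed)."""
    if len(packet) < TAG_LEN:
        return None
    msg, tag = packet[:-TAG_LEN], packet[-TAG_LEN:]
    expected = hmac.new(key, msg, hashlib.sha256).digest()[:TAG_LEN]
    return msg if hmac.compare_digest(tag, expected) else None

def accept(key: bytes, packets):
    """Keep only authenticated messages, as a receiver in the time distribution tree would."""
    return [m for m in (verify(key, p) for p in packets) if m is not None]

KEY = b"shared-domain-key"  # hypothetical pre-shared key
good = sign(KEY, b"Sync seq=42")
forged = sign(b"attacker-key", b"Sync seq=43")
tampered = bytearray(good)
tampered[0] ^= 0x01  # flip one bit of the message body
print(accept(KEY, [good, forged, bytes(tampered)]))  # only the first message survives
```

`hmac.compare_digest` is used instead of `==` so that tag comparison time does not leak how many bytes matched.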

|

In partnership with GE business units, the research team is helping develop and deploy a complete ecosystem of TSN hardware, software, and tools for industrial applications. This technology has already been deployed to our customers via networking products in avionics and locomotive controls. |

Project roadmap | |

Some of the material (e.g., secure time synchronization) is based upon work supported by the Department of Energy under Award Number DE-OE0000894. This report was prepared as an account of work sponsored by an agency of the United States Government. Neither the United States Government nor any agency thereof, nor any of their employees, makes any warranty, express or implied, or assumes any legal liability or responsibility for the accuracy, completeness, or usefulness of any information, apparatus, product, or process disclosed, or represents that its use would not infringe privately owned rights. Reference herein to any specific commercial product, process, or service by trade name, trademark, manufacturer, or otherwise does not necessarily constitute or imply its endorsement, recommendation, or favoring by the United States Government or any agency thereof. The views and opinions of authors expressed herein do not necessarily state or reflect those of the United States Government or any agency thereof. | Abdul Jabbar | Industrial Networking | Embedded Computing, Industrial Networking, Controls & Optimization | Aerospace, Power & Energy, Transportation, Defense & Security | GE Businesses, Government Agencies | Off | |||||||||||||

| Off |

|

Control Co-design of Floating Offshore Wind Turbines | Floating offshore wind turbine (FOWT) technology today has a high cost compared with fixed-base offshore or onshore wind turbine technology and is therefore not an economically attractive renewable energy resource. A primary cause of this cost gap is that the main components of state-of-the-art FOWTs, the turbine and the floating platform, are designed independently. Currently, floating platforms are designed to be large and heavy so that the wind turbine performs as if it were installed on a fixed base. Supported by the ARPA-E ATLANTIS program, GE Research and Glosten, Inc., are collaborating on a radically different approach to designing a 12 MW lightweight FOWT. The weight reductions are achieved through control co-design, platform actuation, and system optimization, which this effort approaches in three steps. In the first step, a baseline FOWT is sized, modeled, and simulated by integrating an offshore wind turbine similar to GE’s Haliade-X 12 MW turbine with Glosten’s PelaStar tension leg platform (TLP) design. This baseline serves multiple purposes: it provides a benchmark for the performance and cost metrics of a state-of-the-art FOWT design, and it gives insight into the key system-level dynamics that drive the design, which are used in the second step. In this step, the advanced control algorithms that operate the turbine are designed concurrently with the integrated structure of the FOWT. Active tendon control will be considered to enhance the controllability of the platform dynamics. Designing the structure and controls concurrently will eliminate conservatism and suboptimality in the closed-loop dynamics, resulting in lower mass of key subsystems such as the tower and platform.
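Why designing structure and controls concurrently can beat designing them in sequence can be shown with a deliberately abstract toy problem. The quadratic cost below, with one "structure" parameter d, one "controller" parameter c, and a coupling term between them, is hypothetical and is not a FOWT model. The sequential route fixes a baseline controller, optimizes the structure, then tunes the controller; co-design minimizes over both at once.

```python
import numpy as np

# Toy cost J(z) = 0.5 z^T A z - b^T z with z = [d, c]; A is positive definite.
# The off-diagonal entries couple structure and control (hypothetical numbers).
A = np.array([[2.0, 1.5],
              [1.5, 2.0]])
b = np.array([4.0, 2.0])

def cost(d, c):
    z = np.array([d, c])
    return 0.5 * z @ A @ z - b @ z

def sequential(c_baseline):
    # Step 1: best structure for a fixed baseline controller (dJ/dd = 0).
    d = (b[0] - A[0, 1] * c_baseline) / A[0, 0]
    # Step 2: best controller for that frozen structure (dJ/dc = 0).
    c = (b[1] - A[1, 0] * d) / A[1, 1]
    return d, c

def co_design():
    # Joint optimum: the gradient A z - b vanishes.
    d, c = np.linalg.solve(A, b)
    return d, c

d_s, c_s = sequential(c_baseline=1.0)
d_j, c_j = co_design()
print(f"sequential: J = {cost(d_s, c_s):.4f}, co-design: J = {cost(d_j, c_j):.4f}")
```

Because the coupling term is nonzero, freezing either variable while tuning the other misses the joint optimum; removing that kind of suboptimality is what the concurrent design step targets.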
In step 3, to push the Levelized Cost of Energy (LCOE) further toward optimality, a system-level approach numerically optimizes the system dynamics and component interactions by simultaneously optimizing the parameters of the FOWT design and the controls. |

This project fits GE’s strategy to develop competitive floating offshore wind solutions, which require innovations in combining and integrating wind turbine technology with the variety of existing floating platform technologies. The proposed design in this TLP-focused project achieves aggressive mass reductions of over 35% in the platform and tower compared with state-of-the-art FOWT designs, with a potential LCOE reduction of more than 20% compared with the baseline. |

Project roadmap | |

The information, data, or work presented herein was funded in part by the Advanced Research Projects Agency-Energy (ARPA-E), U.S. Department of Energy, under Award Number DE-AR0001177. The views and opinions of authors expressed herein do not necessarily state or reflect those of the United States Government or any agency thereof. | Estimation & Modeling | Controls & Optimization, Model-Based Controls, Estimation & Modeling | Power & Energy | GE Businesses, Government Agencies, Strategic Partners | On | ||||||||||||||

| On |

|

5G Applications | Kicked off in 2019, the 5G Mission in Forge is focused on connecting the promise of 5G to industrial use cases. Together with our partners in the telecom industry, we have established one of the nation’s first cross-industry 5G testbeds to carry out this pioneering work.

GE researchers are exploring a range of applications across multiple industries in energy, healthcare, aviation, and defense, including real-time industrial controls for wind farms, wireless and pervasive patient monitoring, and resilient airbases. We are building a robust network of collaborators and partners to help us have the most advanced and up-to-date industrial 5G networking capabilities available. Beyond 5G’s promise of stunningly low latency and incredibly high bandwidth, our 5G mission is exploring even more transformational capabilities under the 5G hood. One example is leveraging network slicing and virtualization to create custom segments of the network for the grid, for healthcare, or for any other industrial segment. Another is improving on GPS to provide more precise indoor and outdoor localization that tracks hazards in the environment to enhance workplace safety. And 5G will enable us to make full use of edge compute to provide enhanced privacy and security while shifting computation closer to where it is needed.
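How 5G localization would be computed is not specified here. One standard approach, shown as an assumption, is multilateration: given range estimates to several anchors (for example, base stations) at known positions, such as ranges derived from signal time of arrival, subtracting one range equation from the others yields a linear system that least squares can solve. Anchor coordinates and ranges below are hypothetical.

```python
import numpy as np

def multilaterate(anchors, ranges):
    """Least-squares position from ranges to known anchors (2-D or 3-D).

    From ||p - a_i||^2 = r_i^2, subtracting the i = 0 equation gives
    2 (a_i - a_0) . p = r_0^2 - r_i^2 + ||a_i||^2 - ||a_0||^2
    """
    a = np.asarray(anchors, dtype=float)
    r = np.asarray(ranges, dtype=float)
    A = 2.0 * (a[1:] - a[0])
    y = r[0] ** 2 - r[1:] ** 2 + np.sum(a[1:] ** 2, axis=1) - np.sum(a[0] ** 2)
    p, *_ = np.linalg.lstsq(A, y, rcond=None)
    return p

anchors = [(0, 0), (10, 0), (0, 10), (10, 10)]  # hypothetical anchor positions, meters
true_p = np.array([3.0, 4.0])
ranges = [np.linalg.norm(true_p - np.array(a)) for a in anchors]
print(multilaterate(anchors, ranges))
```

With noisy ranges, using more anchors than the minimum lets least squares average out part of the error.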

|

The 5G mission team has partnered with LocatorX and Georgia Tech Research Institute to work on two projects totaling $36 million with the U.S. Department of Defense to modernize warehouse facilities at two military bases using smart 5G-enabled technologies. These projects will support the Department’s efforts to execute real-time planning and deployment of resources and supplies for critical missions. The team will integrate digital twin technology, autonomous robots and real-time tracking of thousands of assets and components, utilizing the power of 5G networks, to create smart warehouse facilities. |

GE adds Verizon 5G to Testbed to explore Energy, Health Care and Aviation use cases, GE Research and the US Department of Defense Partner on 5G Initiative to Create Smart Warehouses, AT&T First to Deliver Both High and Low-band 5G Connectivity to GE Research Campus | Project roadmap | These efforts have been sponsored by the U.S. Government under Other Transaction number W15QKN-15-9-1004 between the NSC, and the Government. The US Government is authorized to reproduce and distribute reprints for Governmental purposes notwithstanding any copyright notation thereon. The views and conclusions contained herein are those of the authors and should not be interpreted as necessarily representing the official policies or endorsements, either expressed or implied, of the U.S. Government. | SM Hasan | Sriram Boppana | Enterprise Optimization | Digital Technologies, Edge Computing, Software & Analytics, Robotics & Autonomous Systems, Controls & Optimization, Electronics & Sensing, Artificial Intelligence | Power & Energy, Healthcare, Transportation, Defense & Security | GE Businesses, Government Agencies | R&D Facilities, Niskayuna, Collaboration Spaces, Forge Lab | On | |||||||||||

| On | |

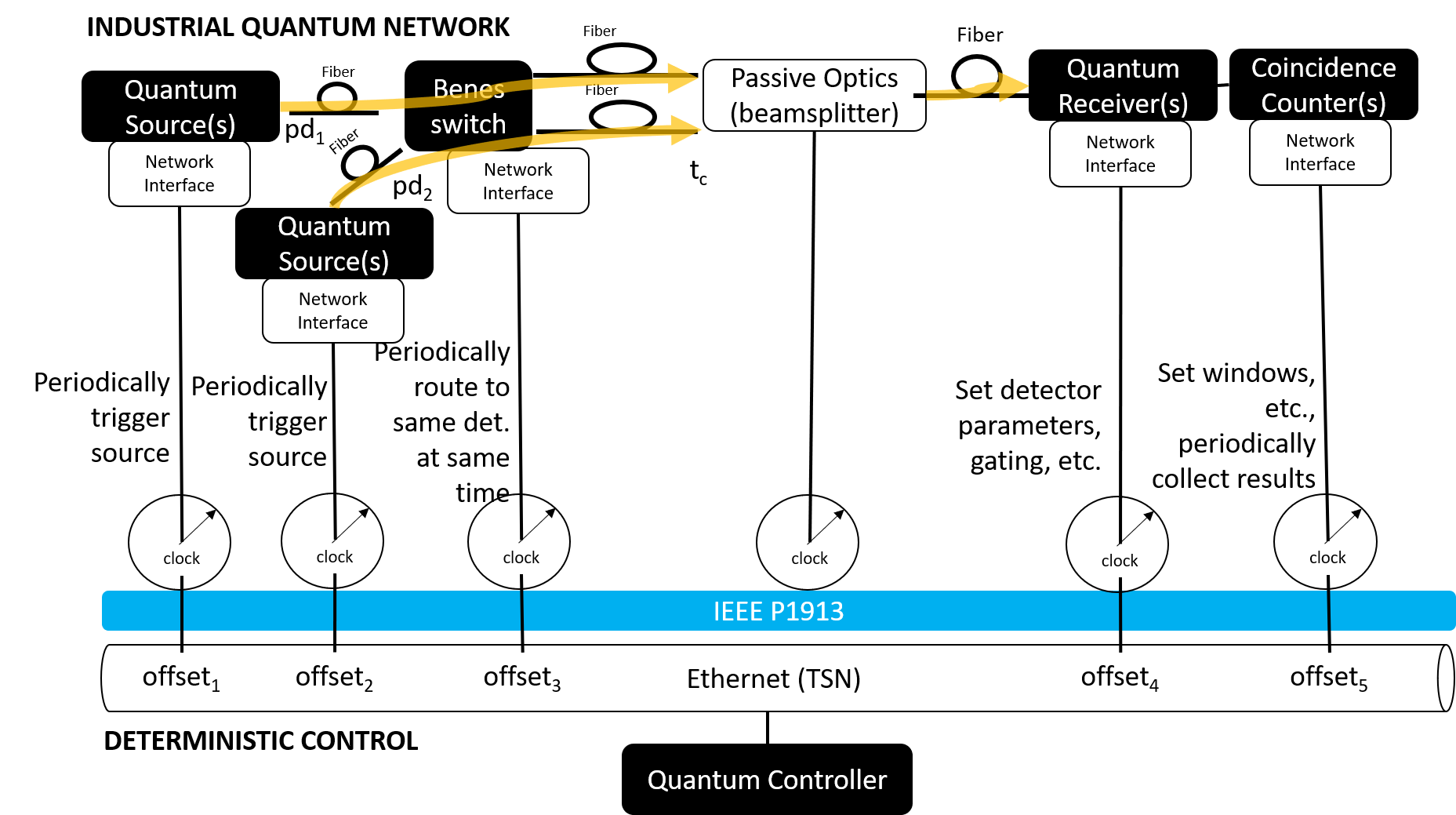

Time-Sensitive Quantum Key Distribution | Time-sensitive quantum key distribution (TSQKD) leverages time-sensitive networking (TSN) and quantum key distribution (QKD) technologies to provide industrial control networks with simpler, more deterministic, and more secure communication. Since the discovery of quantum secure communication, a variety of protocols and techniques have been proposed and developed to make the technology more practical, secure, and scalable. A notable benefit of QKD is that an unknown quantum state cannot be cloned, so detecting eavesdropping is a natural part of its operation; today’s classical techniques, which may be broken by quantum computing, cannot currently offer this. Integrating the precise, deterministic network control enabled by TSN with the quantum optics required to implement QKD results in a more secure industrial control network. QKD has been fit seamlessly into the existing, easy-to-use TSN network configuration and management process, as demonstrated with GE grid intelligent electronic devices and standard utility protocols. This project has resulted in a measurement-device-independent quantum key distribution (MDI-QKD) design: a simpler, less expensive, “plug-and-play” system implemented in a photonic integrated circuit that can be integrated directly within industrial devices and alleviates potential side-channel attacks. TSN provides the precise network control required by quantum optics to implement a control plane suitable for the quantum internet. Key benefits of TSQKD:

We believe that standards are essential to market adoption, and we are contributing toward standardized quantum network management, configuration, and control via IEEE P1913, as illustrated in the following figure.

In contrast to the many QKD efforts that aim to extend communication distance and data rates, we suggest that, within the power grid, it is more important to enable short-distance, low-data-rate encrypted communication. We propose a technique that incorporates QKD into photonic integrated circuits (PICs) to enable low-cost implementation of QKD across the hundreds of thousands of devices on the power grid network. To achieve a PIC implementation, we propose applying the plug-and-play MDI-QKD design concept by removing Faraday mirrors at the edge device locations and greatly reducing the number of untrusted Charlie nodes, which must incorporate components that are expensive and require more supporting infrastructure and routine maintenance. Estimates of key generation rates indicate that, for short distances, Charlie nodes can operate quite well with either InGaAs SPADs or SNSPDs. We believe that TSN, an integral part of the power grid network, is ideally suited to this QKD technique, in which controlling the time of arrival of photons at Charlie is critical to making successful Bell state measurements.
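The timing requirement in the last sentence can be made concrete with a small calculation. For a Bell state measurement at Charlie, photons from Alice and Bob must arrive within a narrow coincidence window, so each side has to offset its emission time by its own fiber propagation delay. The sketch assumes a fiber group index of 1.47 and a hypothetical 100 ps window, and it ignores detector jitter and dispersion. It illustrates why a shared, precise time base matters; it does not describe GE's implementation.

```python
C_KM_PER_S = 299_792.458  # speed of light in vacuum, km/s
N_GROUP = 1.47            # assumed group index of single-mode fiber

def fiber_delay_s(length_km: float) -> float:
    return length_km * N_GROUP / C_KM_PER_S

def launch_times(target_arrival_s, alice_km, bob_km):
    """Emission times so both photons reach Charlie at target_arrival_s."""
    return (target_arrival_s - fiber_delay_s(alice_km),
            target_arrival_s - fiber_delay_s(bob_km))

def coincident(t_alice, t_bob, alice_km, bob_km, window_s):
    """True if the two photons arrive at Charlie within window_s of each other."""
    arrival_a = t_alice + fiber_delay_s(alice_km)
    arrival_b = t_bob + fiber_delay_s(bob_km)
    return abs(arrival_a - arrival_b) <= window_s

WINDOW_S = 100e-12  # hypothetical coincidence window
t_a, t_b = launch_times(1e-3, alice_km=2.0, bob_km=5.0)
print(f"Bob emits {(t_a - t_b) * 1e6:.2f} us before Alice")
print(coincident(t_a, t_b, 2.0, 5.0, WINDOW_S))         # True
print(coincident(t_a, t_b + 1e-9, 2.0, 5.0, WINDOW_S))  # 1 ns clock error: False
```

A clock disagreement of only 1 ns between the two senders already breaks coincidence under these assumptions, which is why sub-nanosecond time synchronization across the network is the relevant requirement.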

If you are interested in teaming with GE Research for external opportunities to build upon this work toward a TSN-enabled control plane for the quantum internet, please contact [email protected]. |

GE Research Awarded $12.6 Million from the US Department of Energy to Develop Quantum-level Cybersecurity Protections for Critical Power Assets | Project roadmap | |

This material is based upon work supported by the Department of Energy under Award Number DE-OE0000894. This report was prepared as an account of work sponsored by an agency of the United States Government. Neither the United States Government nor any agency thereof, nor any of their employees, makes any warranty, express or implied, or assumes any legal liability or responsibility for the accuracy, completeness, or usefulness of any information, apparatus, product, or process disclosed, or represents that its use would not infringe privately owned rights. Reference herein to any specific commercial product, process, or service by trade name, trademark, manufacturer, or otherwise does not necessarily constitute or imply its endorsement, recommendation, or favoring by the United States Government or any agency thereof. The views and opinions of authors expressed herein do not necessarily state or reflect those of the United States Government or any agency thereof. | Stephen Bush | Jim Bray | Cybersecurity | Edge Computing, Embedded Computing, Cybersecurity, Electronics & Sensing, Optics & Photonics | Power & Energy, Defense & Security | GE Businesses, GE Power, GE Renewable Energy, Government Agencies, DOE - National Energy Technology Laboratory (NETL) | R&D Facilities, Niskayuna, Research Labs, RF/Microwave & Photonics, Edge Compute Lab | On | |||||||||||

| Off |

|

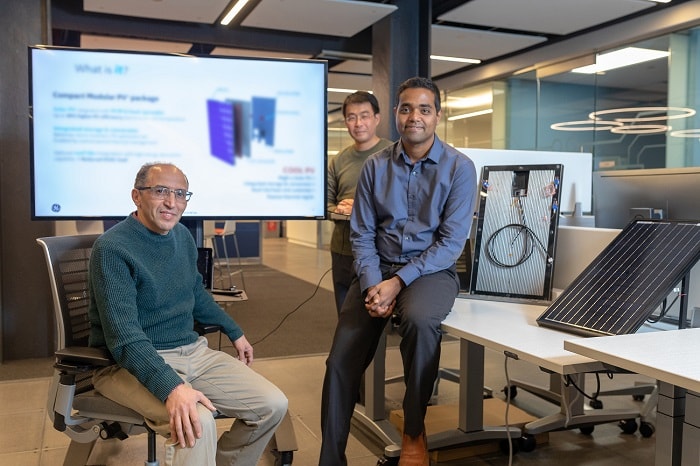

Cool PV | One of the ironies of solar power is that when the sun is at its brightest, the efficiency of converting sunlight to electricity is lower because of the high temperatures. In addition, the full ecosystem of a solar power plant installation is complex, with many different components and systems required. Cool PV is a thermally enabled, modular, and compact solar power plant that delivers higher efficiency in a fully integrated energy system. The initial target is novel thermal management, which enables an efficiency increase of up to 30%. In addition, we are looking at novel compact system architectures that result in a plug-and-play energy solution. This has the potential to lower the balance-of-system and other operation and maintenance costs associated with traditional solar solutions.
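The temperature effect in the first sentence is commonly modeled with a linear temperature coefficient: cell efficiency falls by a fraction β for each kelvin above the 25 °C reference temperature. The values below (20% reference efficiency and β = 0.4%/K, within the typical range for crystalline silicon) are assumptions for illustration; they are not Cool PV measurements and are not meant to reproduce the 30% figure above.

```python
T_REF_C = 25.0  # standard test condition cell temperature, deg C

def pv_efficiency(cell_temp_c, eta_ref=0.20, beta_per_k=0.004):
    """Linear temperature model: eta = eta_ref * (1 - beta * (T - T_ref))."""
    return eta_ref * (1.0 - beta_per_k * (cell_temp_c - T_REF_C))

def pv_power_w(irradiance_w_m2, area_m2, cell_temp_c, **kwargs):
    """Electrical output for a given irradiance, module area, and cell temperature."""
    return pv_efficiency(cell_temp_c, **kwargs) * irradiance_w_m2 * area_m2

hot = pv_power_w(1000, 1.6, cell_temp_c=65)     # hypothetical uncooled cell
cooled = pv_power_w(1000, 1.6, cell_temp_c=40)  # hypothetical cooled cell
print(f"{hot:.0f} W vs {cooled:.0f} W ({cooled / hot - 1:.1%} more)")
```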

|

The mission is focused first on testing an experimental setup inside the Forge Lab, but has ambitions beyond that. After initial prototyping, the goal is to install the system in the solar array on the GE Research campus, allowing side-by-side validation of any improvements the technology makes over traditional PV (photovoltaic) architectures. Key demonstrations/milestones:

|

|

Thermosciences | Thermosciences, Electric Power | Power & Energy | GE Businesses | Niskayuna, Collaboration Spaces, Forge Lab | On | |||||||||||||||

| Off |

|

Soteria | Soteria is a powerful combination of sensing, real-time analytics, artificial intelligence (AI), and edge and cloud computing. It is the first occupational safety offering that uses ubiquitous sensing and analytics to keep workers safe while they perform essential job tasks. Soteria integrates data in real time from a wide range of industrial wearables and environmental sensors to enable actionable outcomes and predictive analytics for its users. The system includes an open API for integrating GE and non-GE sensors, along with data storage and flexible dashboards for viewing actions and outcomes. Soteria is poised to be the first Forge mission to run a commercial pilot in 2020. This mission is a perfect storm of technology, combining novel proprietary and third-party wearable sensors, smart environments, edge and cloud computing, and analytics, with occupational safety as a demonstration use case.

|

The team built a unique and fully integrated demonstration of how, in real time, they can fuse data from a multitude of sensors to build rich analytics that go beyond what any single sensor can provide. They developed a system that allows them to pick specific sensors, form factors, and analytics to create a custom solution.
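The value of fusing sensors can be illustrated with a small rule: a single elevated reading only raises a "watch" status, while concurrent elevation on a wearable and an environmental sensor raises an "alert". Everything here is hypothetical (the sensor names, the 5 s freshness window, and the thresholds, which are illustrative rather than safety guidance); it is not Soteria's analytics or API.

```python
from dataclasses import dataclass

@dataclass
class Reading:
    sensor: str  # e.g. "heart_rate" (wearable) or "co_ppm" (area gas monitor)
    value: float
    t: float     # timestamp, seconds

HR_LIMIT = 120.0  # hypothetical heart-rate threshold, beats/min
CO_LIMIT = 35.0   # hypothetical carbon monoxide threshold, ppm

def latest(readings, now, max_age_s):
    """Most recent reading per sensor, ignoring readings older than max_age_s."""
    fresh = {}
    for r in sorted(readings, key=lambda rd: rd.t):
        if now - r.t <= max_age_s:
            fresh[r.sensor] = r.value
    return fresh

def assess(readings, now, max_age_s=5.0):
    s = latest(readings, now, max_age_s)
    if "heart_rate" not in s or "co_ppm" not in s:
        return "insufficient data"
    hr_high = s["heart_rate"] > HR_LIMIT
    co_high = s["co_ppm"] > CO_LIMIT
    if hr_high and co_high:
        return "alert"  # both sources agree: escalate
    if hr_high or co_high:
        return "watch"  # single-source anomaly
    return "ok"

stream = [Reading("co_ppm", 20, 2.0), Reading("co_ppm", 50, 8.0), Reading("heart_rate", 130, 9.0)]
print(assess(stream, now=10.0))
```

The freshness window keeps a stale reading (the 20 ppm sample at t = 2 s) from masking a newer one, which matters when sensors report at different rates.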

|

|

SM Hasan | Michael Mahony | Industrial Software | Digital Technologies, Software Development, Edge Computing, Embedded Computing, Software & Analytics, Physical-Digital Analytics, Industrial Software, Controls & Optimization, Enterprise Optimization, Real-Time Optimization, Estimation & Modeling, Artificial Intelligence, Machine Learning, Computer Vision | Aerospace, Power & Energy, Healthcare, Transportation, Defense & Security | GE Businesses, GE Aviation, GE Power, GE Renewable Energy | R&D Facilities, Niskayuna, Collaboration Spaces, Forge Lab | On | |||||||||||||

| Off |

|

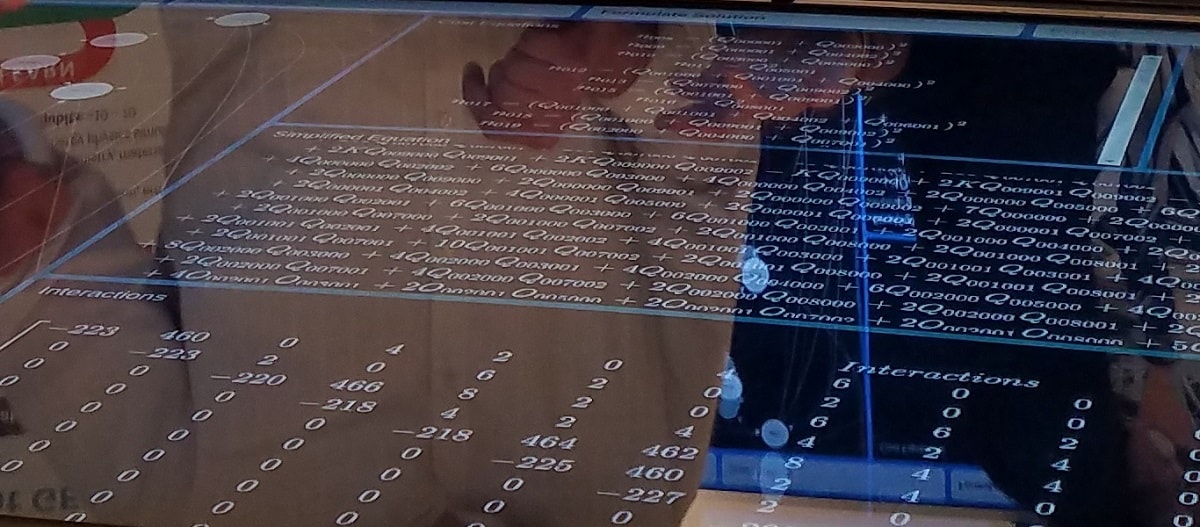

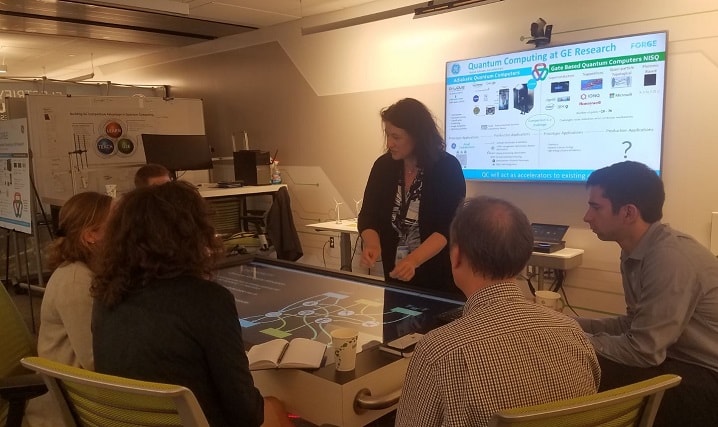

Quantum Computing | What if you could put the idea of “optimal” back on the table? At scale, many of the world’s problems are so complex that they are computationally intractable to solve through traditional means, both today and in the decades to come. We rely on heuristics to get us to “good enough,” and every incremental step we can take toward optimal means huge savings in efficiency and/or performance. But what if we could take a leap forward through an entirely new form of computation? That is the promise of quantum computing. This mission sets out to answer the question: can we run a real-world industrial algorithm on an actual quantum computer? And from there, can we break through the hype and determine when, and for what types of algorithms, quantum will reign supreme over traditional computation? In March 2019, GE and more than 600 visitors became “quantum initiates” when we ran our demonstration on how to decipher the future of this incredible technology.

|

This mission quickly found its sweet spot in planning optimization as an initial use case. Through an interactive demonstration, visitors to the lab could take an asset sustainment problem from our Aviation service shops, see how it is formalized to run on an adiabatic quantum computer, and finally run it on the actual machine.
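Formalizing a problem for an adiabatic quantum computer usually means writing it as a quadratic unconstrained binary optimization (QUBO). The toy below is not the Aviation asset sustainment model: it picks exactly k of n hypothetical maintenance jobs to maximize value, folding the "exactly k" constraint into the objective as a quadratic penalty. A brute-force search stands in for the quantum annealer and is only practical for a handful of variables.

```python
import itertools
import numpy as np

def build_qubo(values, k, penalty):
    """Upper-triangular Q for: minimize -sum(v_i x_i) + penalty * (sum(x_i) - k)^2.

    Uses x_i^2 = x_i for binary x; the constant penalty * k^2 is dropped.
    """
    n = len(values)
    Q = np.zeros((n, n))
    for i in range(n):
        Q[i, i] = -values[i] + penalty * (1 - 2 * k)
        for j in range(i + 1, n):
            Q[i, j] = 2 * penalty
    return Q

def energy(Q, x):
    x = np.asarray(x)
    return float(x @ Q @ x)

def brute_force(Q):
    """Exhaustive minimum over all 2^n bit strings (stand-in for an annealer)."""
    return min(itertools.product((0, 1), repeat=Q.shape[0]), key=lambda x: energy(Q, x))

values = [5, 3, 4, 1]  # hypothetical value of servicing each asset
Q = build_qubo(values, k=2, penalty=10)
best = brute_force(Q)
print(best, energy(Q, best))
```

The penalty weight must be large enough that violating the constraint never pays off; here it exceeds the largest single job value.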

|

Asset Sustainment Demo complete

|

Real-Time Optimization | Digital Technologies, High Performance Computing, Software & Analytics, Industrial Software, Controls & Optimization, Enterprise Optimization, Real-Time Optimization | Aerospace, Power & Energy, Advanced Manufacturing, Transportation, Defense & Security | GE Businesses, GE Aviation | R&D Facilities, Niskayuna, Collaboration Spaces, Forge Lab | On |