Archive Compression

Archive Compression Overview

The Data Archiver performs archive compression to conserve disk storage space. Archive compression can be used on tags populated by any method (collector, migration, File collector, SDK programs, Excel, etc.).

Archive compression is a tag property. It can be enabled or disabled per tag, and each tag can have its own deadband.

Archive compression applies to numeric data types (scaled, float, double float, integer and double integer). It does not apply to string or blob data types. Archive compression is useful only for analog values, not digital values.

Archive compression can result in fewer raw samples stored to disk than were sent by the collector.

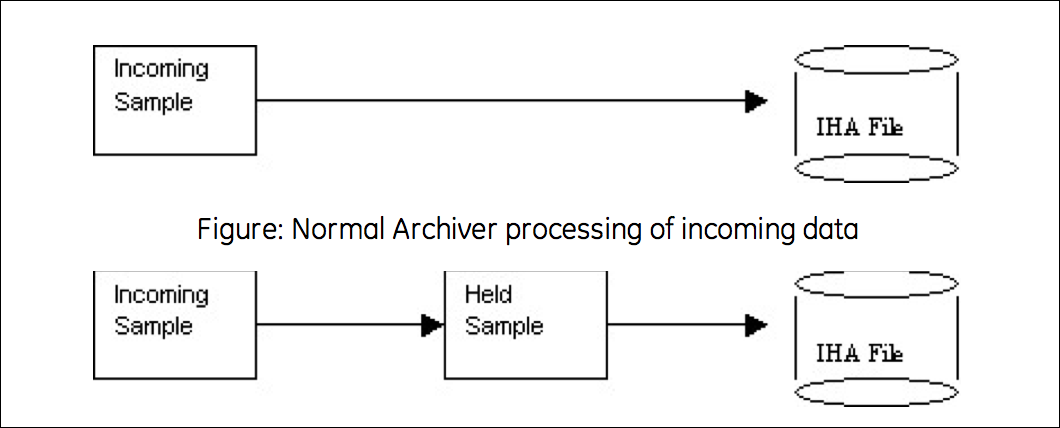

Archive compression uses a held sample. This is a sample held in memory but not yet written to disk. The incoming sample always becomes the held sample. When an incoming sample is received, the currently-held sample is either written to disk or discarded. If the currently-held sample is always sent to disk, no compression occurs. If the currently-held sample is discarded, nothing is written to disk and storage space is conserved.

In other words, collector compression occurs when the collected incoming value is discarded. Archive compression occurs when the currently-held value is discarded.

Held samples are written to disk when archive compression is disabled or the archiver is shut down.
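The held-sample mechanism can be illustrated with a minimal Python sketch. The class `HeldSampleBuffer` and its `keep_held` callback are hypothetical names introduced only for illustration, and the first sample is assumed to be written directly; this is a model of the behavior described above, not the Data Archiver's actual code.

```python
from typing import Callable, List, Optional, Tuple

Sample = Tuple[int, float]  # (timestamp, value)


class HeldSampleBuffer:
    """Hypothetical model of one held sample in front of an archive."""

    def __init__(self, keep_held: Callable[[Sample, Sample], bool]) -> None:
        self.keep_held = keep_held          # decides: write or discard the held sample
        self.held: Optional[Sample] = None  # in memory, not yet on disk
        self.disk: List[Sample] = []        # stands in for the archive file

    def receive(self, incoming: Sample) -> None:
        if not self.disk:
            # Assumption: the first sample for a tag is written directly.
            self.disk.append(incoming)
            return
        if self.held is not None and self.keep_held(self.held, incoming):
            self.disk.append(self.held)
        # The incoming sample always becomes the held sample.
        self.held = incoming

    def flush(self) -> None:
        """Write the held sample, as on shutdown or when compression is disabled."""
        if self.held is not None:
            self.disk.append(self.held)
            self.held = None


samples = [(0, 2.0), (5, 2.0), (10, 2.0), (15, 2.0)]

# Held sample always written: no compression.
no_compression = HeldSampleBuffer(keep_held=lambda held, new: True)
# Held sample always discarded: maximum compression.
full_discard = HeldSampleBuffer(keep_held=lambda held, new: False)

for s in samples:
    no_compression.receive(s)
    full_discard.receive(s)
no_compression.flush()
full_discard.flush()

print(len(no_compression.disk))  # 4
print(full_discard.disk)         # [(0, 2.0), (15, 2.0)]
```

When the held sample is always written, every sample reaches disk. When it is always discarded, only the first sample and the sample flushed at shutdown are stored.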

Archive Compression Logic

    IF the incoming sample data quality = held sample data quality
        IF the new point is bad
            // Toss the value to avoid repeated bads. Do we toss new bad or old bad?
        ELSE
            // Decide if the new value exceeds the archive compression deadband
    ELSE
        // Data quality has changed; flush held sample to disk

    IF we have exceeded deadband or changed quality
        // Store the old held sample in the archive
        // Set up new deadband threshold using incoming value and value written to disk

    // Make the incoming value the held value

Example: Change of data quality

The effect of archive compression is demonstrated in the following examples.

- A change in data quality causes held samples to be stored.

- Held samples are returned only in a current value sampling mode query.

- Restarting the archiver causes the held sample to be flushed to disk.

| Time | Value | Quality |
|---|---|---|
| t0 | 2 | Good |
| t1 | 2 | Bad |
| t2 | 2 | Good |

The following SQL query lets you see which data values were stored:

select * from ihRawData where samplingmode=rawbytime and tagname = t20.ai-1.f_cv and timestamp > today

Notice that the value at t2 does not show up in a RawByTime query because it is a held sample. The held sample would appear in a current value query, but not in any other sampling mode:

select * from ihRawData where samplingmode=CurrentValue and tagname = t20.ai-1.f_cv

The stored points should accurately reflect the true time period during which the data quality was bad.

Shutting down and restarting the archiver forces it to write the held sample. Running the RawByTime query again then shows that all three samples are stored.
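To make this example concrete, the following Python sketch simulates the archive compression logic for the three samples above. It is an illustration under stated assumptions, not the archiver's implementation: the names `Sample` and `ArchiveCompressor` are hypothetical, the deadband test is simplified to a comparison against the last archived value, the deadband of 1.0 is arbitrary, and a repeated bad sample is assumed to replace the older held bad sample.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Sample:
    time: str
    value: float
    quality: str  # "Good" or "Bad"


class ArchiveCompressor:
    """Illustrative model only; not the Data Archiver's actual code."""

    def __init__(self, deadband: float) -> None:
        self.deadband = deadband
        self.archived: List[Sample] = []     # samples written to disk
        self.held: Optional[Sample] = None   # sample held in memory

    def receive(self, incoming: Sample) -> None:
        if not self.archived:
            # Assumption: the first sample for the tag is stored immediately.
            self.archived.append(incoming)
            return
        if self.held is None:
            self.held = incoming
            return
        if incoming.quality != self.held.quality:
            write_held = True   # quality changed: flush held sample
        elif incoming.quality == "Bad":
            write_held = False  # repeated bad: assumed to drop the older one
        else:
            # Simplified deadband test against the last archived value.
            last = self.archived[-1]
            write_held = abs(incoming.value - last.value) > self.deadband
        if write_held:
            self.archived.append(self.held)
        self.held = incoming    # incoming always becomes the held sample

    def flush(self) -> None:
        """Model of an archiver shutdown: write the held sample."""
        if self.held is not None:
            self.archived.append(self.held)
            self.held = None


compressor = ArchiveCompressor(deadband=1.0)  # hypothetical deadband
for s in [Sample("t0", 2, "Good"), Sample("t1", 2, "Bad"), Sample("t2", 2, "Good")]:
    compressor.receive(s)

before_restart = [s.time for s in compressor.archived]
held_time = compressor.held.time
compressor.flush()
after_restart = [s.time for s in compressor.archived]

print(before_restart)  # ['t0', 't1']
print(held_time)       # t2
print(after_restart)   # ['t0', 't1', 't2']
```

Before the flush, t2 exists only as the held sample, which corresponds to it being absent from the RawByTime result and present in the CurrentValue result.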

Archive Compression of Straight Line

| Time | Value | Quality |
|---|---|---|
| t0 | 2 | Good |
| t0+5 | 2 | Good |
| t0+10 | 2 | Good |
| t0+15 | 2 | Good |
| t0+20 | 2 | Good |

Shut down and restart the archiver, then perform the following SQL query:

select * from ihRawData where samplingmode=rawbytime and tagname = t20.ai-1.f_cv and timestamp > today

Only t0 and t0+20 were stored. t0 is the first point, and t0+20 is the held sample written to disk on archiver shutdown, even though no deadband was exceeded.

Bad Data

| Time | Value | Quality |
|---|---|---|
| t0 | 2 | Good |
| t0+5 | 2 | Bad |
| t0+10 | 2 | Bad |
| t0+15 | 2 | Bad |
| t0+20 | 2 | Good |
| t0+25 | 3 | Good |

- The t0+5 value is stored because of the change in data quality.

- The t0+10 value is not stored because repeated bad values are not stored.

- The t0+15 value is stored when the t0+20 sample arrives, because of the change in quality.

Disabling Archive Compression for a Tag

| Time | Value | Quality |
|---|---|---|
| t0 | 2 | Good |
| t0+5 | 10 | Good |
| t0+10 | 99 | Good |
| t0+15 | Archive compression disabled | |

- The t0 value is stored because it is the first sample.

- The t0+5 value is stored when the t0+10 sample arrives.

- The t0+10 value is stored when archive compression is disabled for the tag.

Archive Compression of Good Data

This example demonstrates that the held value is written to disk when the deadband is exceeded.

In this case, we have an upward ramping line. Assume a large archive compression deadband, such as 75% on a 0 to 100 EGU span.

| Time | Value | Quality |
|---|---|---|
| t0 | 2 | Good |
| t0+5 | 10 | Good |
| t0+10 | 10 | Good |
| t0+15 | 10 | Good |
| t0+20 | 99 | Good |

Shut down and restart the archiver, then perform the following SQL query:

select * from ihRawData where samplingmode=rawbytime and tagname = t20.ai-1.f_cv and timestamp > today

Because of archive compression, the t0+5 and t0+10 values are not stored. The t0+15 value is stored when the t0+20 sample arrives. Without the restart, the t0+20 value would not be stored until a future sample arrives, no matter how long that takes.
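The arithmetic behind this example can be checked with a short Python sketch. The helper names `deadband_in_egu` and `compress` are hypothetical, and the deadband test is an assumed simplification that compares each incoming value with the last stored value; the archiver's own threshold calculation is not reproduced here.

```python
def deadband_in_egu(percent, lo_egu, hi_egu):
    """Convert a percent-of-span deadband to engineering units."""
    return (hi_egu - lo_egu) * percent / 100.0


def compress(samples, deadband):
    """samples: list of (label, value), all good quality.

    Returns (stored, held) under the simplified rule: the held sample is
    written when the incoming value differs from the last stored value
    by more than the deadband.
    """
    stored, held = [], None
    for sample in samples:
        if not stored:
            stored.append(sample)   # first sample is stored immediately
            continue
        if held is not None and abs(sample[1] - stored[-1][1]) > deadband:
            stored.append(held)     # deadband exceeded: write held sample
        held = sample               # incoming becomes the held sample
    return stored, held


deadband = deadband_in_egu(75, 0, 100)
ramp = [("t0", 2), ("t0+5", 10), ("t0+10", 10), ("t0+15", 10), ("t0+20", 99)]
stored, held = compress(ramp, deadband)

print(deadband)  # 75.0
print(stored)    # [('t0', 2), ('t0+15', 10)]
print(held)      # ('t0+20', 99)
```

The values at t0+5 through t0+15 differ from the stored value 2 by only 8 EGU, well inside the 75 EGU deadband. The jump to 99 (a difference of 97) exceeds it, which writes the held t0+15 sample and leaves t0+20 held.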